Sign languages, which are the primary languages of Deaf and Hard of Hearing people, are visual languages expressed through hand and face movement. Interpreters have long facilitated communication between members of the Deaf and hearing communities. More than 7000 languages are in use in the world today, and at least 300 of them are sign languages. Language translation has been widely used to make the world a more accessible place for everyone, and with the rapid progress of computing technologies, automated interpretation has become a goal and dream of many people.

Speech recognition by computers, commonly known in the industry as Automatic Speech Recognition (ASR), had a modest start a few decades ago and saw slow but steady progress until a few years ago. With the availability of computing power, the advancement of AI algorithms, and the availability of vast amounts of public data, speech recognition has advanced rapidly in the past few years, to the point that in many languages it can closely match human accuracy in recognizing spoken language. ASR has benefited not only hearing people; it has been a big help for the Deaf community as well. It has made content less expensive to caption, enabled live transcription of meetings, and even supported everyday conversations with hearing people through mobile apps.

However, automated interpretation of signed languages has remained elusive. Earlier efforts have involved using specialized devices such as gloves with sensors, or gloves with special-colored patches. These methods tried to avoid technological challenges but fundamentally misunderstood what’s acceptable and usable for the Deaf community.

Even though recent advancements in AI technologies have enabled many exciting applications such as Face Recognition and Autonomous Driving, automated sign language recognition and translation have proven even more challenging.

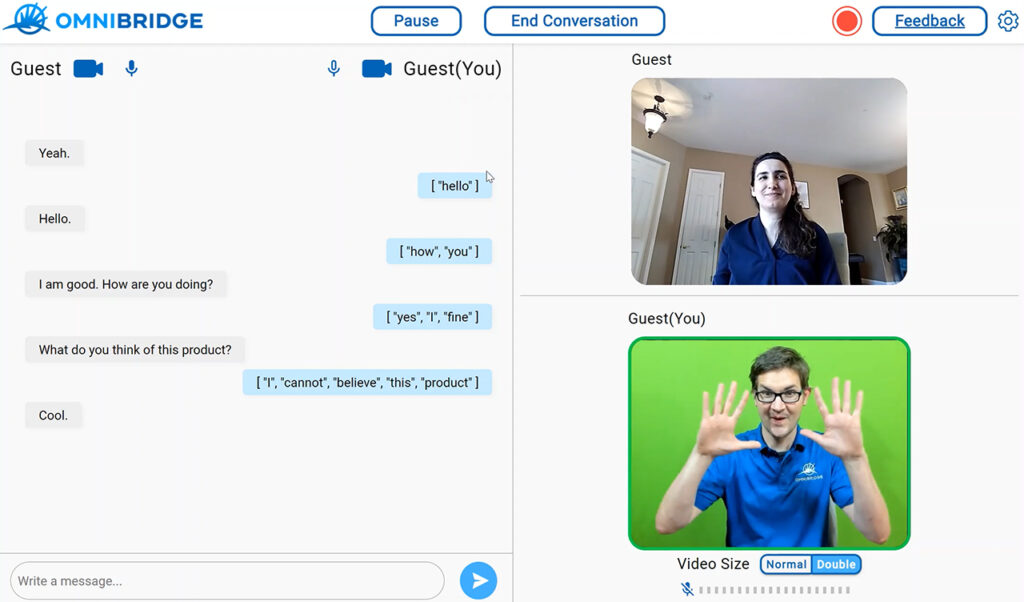

Over the last two and a half years, the OmniBridge team has been developing an AI-based solution that translates real-time signing video into English. The app does more than capture ASL: it also receives speech from an English speaker and converts it to text for the Deaf user. With these solutions, we support bi-directional communication between Deaf and hearing users.

Many of our early testers interacted with our solution at events throughout the U.S. The feedback we received was powerful and constructive. We learned a great deal from those early interactions with the Deaf and Hard of Hearing communities. We have gained a valuable understanding of Deaf culture and history, and we are applying this feedback and learning to the design and advancement of the OmniBridge product.

We are achieving this goal through careful selection and advancement of state-of-the-art Computer Vision, Natural Language Processing, and AI models; through carefully designed, high-volume data collection; and by putting together a team of Deaf and hearing people who are passionate about our mission.